Ash Wednesday

A downloadable game for Windows

A 2023 Portland Indie Game Squad Summer Slow Jam game! (Playtime: 15-25 minutes)

Cruz Flores is a perfectly normal young man living in Portland, Oregon, except for one thing: he knows exactly when he's going to die.

When he meets a woman whose problems interrupt his last day alive, will he find a way to help her before his time runs out?

Trigger Warning: This game deals conversationally with the topics of mortality, illness, and fatalism.

Credits

- Programming, Writing, Art, and Music by Dominic Blais

- Voice Acting by Dominic and Kimberly Blais

Free resources used:

- "Mode 7" font by Andrew Bulhak

- "ActorCore Sample Motions" by Reallusion

- "Animal Variety Pack" by PROTOFACTOR INC

- "2013-07-16 rose garden transit.wav" by Tim Kahn

Copyright (c) 2023 by Dominic Blais. https://mmg.company

Ash Wednesday uses Unreal Engine. Unreal is a trademark or registered trademark of Epic Games, Inc. in the United States of America and elsewhere. Unreal Engine, Copyright 1998 - 2023, Epic Games, Inc. All rights reserved.

Technical Background:

This game uses an experimental game design technique that I hadn't seen used elsewhere. The idea occurred to me while I was working with Stable Diffusion a couple of weeks before the jam began, but this game is my first attempt at implementing it. The essence is this:

- Block out a 3D level using rudimentary shapes

- Place cameras for desired "rooms" in the level

- Render and save the depth map of each camera

- Use Stable Diffusion with ControlNet's depth map model to create room images

- In game, render the room image with any desired 3D models interspersed

The intention of this technique is to allow the game designer a very high level of creative visual freedom and fidelity in 3D scenes without expensive model creation and level design.
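Steps 1 to 3 can be illustrated without an engine. The following is a minimal sketch, not the Unreal setup used in Ash Wednesday. It assumes a block-out made only of axis-aligned boxes described in camera space (x right, y up, z forward) and a pinhole camera at the origin; the names `Box`, `ray_box`, and `render_depth` are hypothetical. Each pixel is ray-cast against the boxes and the nearest hit is kept. Because every ray direction has a z component of 1, the hit parameter equals planar view-space depth, which as far as I know is what Unreal's PixelDepth reports.

```python
import math
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned block-out primitive in camera space (x right, y up, z forward)."""
    lo: tuple[float, float, float]
    hi: tuple[float, float, float]


def ray_box(origin, direction, box):
    """Slab-method ray/AABB intersection; returns the entry parameter t, or None."""
    t_near, t_far = -math.inf, math.inf
    for o, d, lo, hi in zip(origin, direction, box.lo, box.hi):
        if abs(d) < 1e-12:  # ray is parallel to this pair of slabs
            if o < lo or o > hi:
                return None
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        t_near = max(t_near, min(t1, t2))
        t_far = min(t_far, max(t1, t2))
        if t_near > t_far:
            return None
    if t_far < 0:  # box lies entirely behind the camera
        return None
    return max(t_near, 0.0)


def render_depth(boxes, width, height, hfov_deg=90.0, far=10_000.0):
    """Planar depth per pixel for a pinhole camera at the origin looking down +z."""
    tan_half = math.tan(math.radians(hfov_deg) / 2)
    depth = []
    for row in range(height):
        # Scale the vertical extent by height/width so pixels stay square.
        v = (1 - 2 * (row + 0.5) / height) * tan_half * height / width
        line = []
        for col in range(width):
            u = (2 * (col + 0.5) / width - 1) * tan_half
            # z component of 1 means the hit parameter t is the planar depth.
            hits = (ray_box((0.0, 0.0, 0.0), (u, v, 1.0), b) for b in boxes)
            line.append(min((t for t in hits if t is not None), default=far))
        depth.append(line)
    return depth
```

For example, `render_depth([Box((-500, -500, 600), (500, 500, 610)), Box((-50, -100, 300), (50, -60, 340))], 81, 54)` would give a small depth map of a back wall with a bench-sized box in front of it. A real engine rasterizes instead of ray-casting, but the per-pixel result is the same nearest-surface depth.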

Detailed Description:

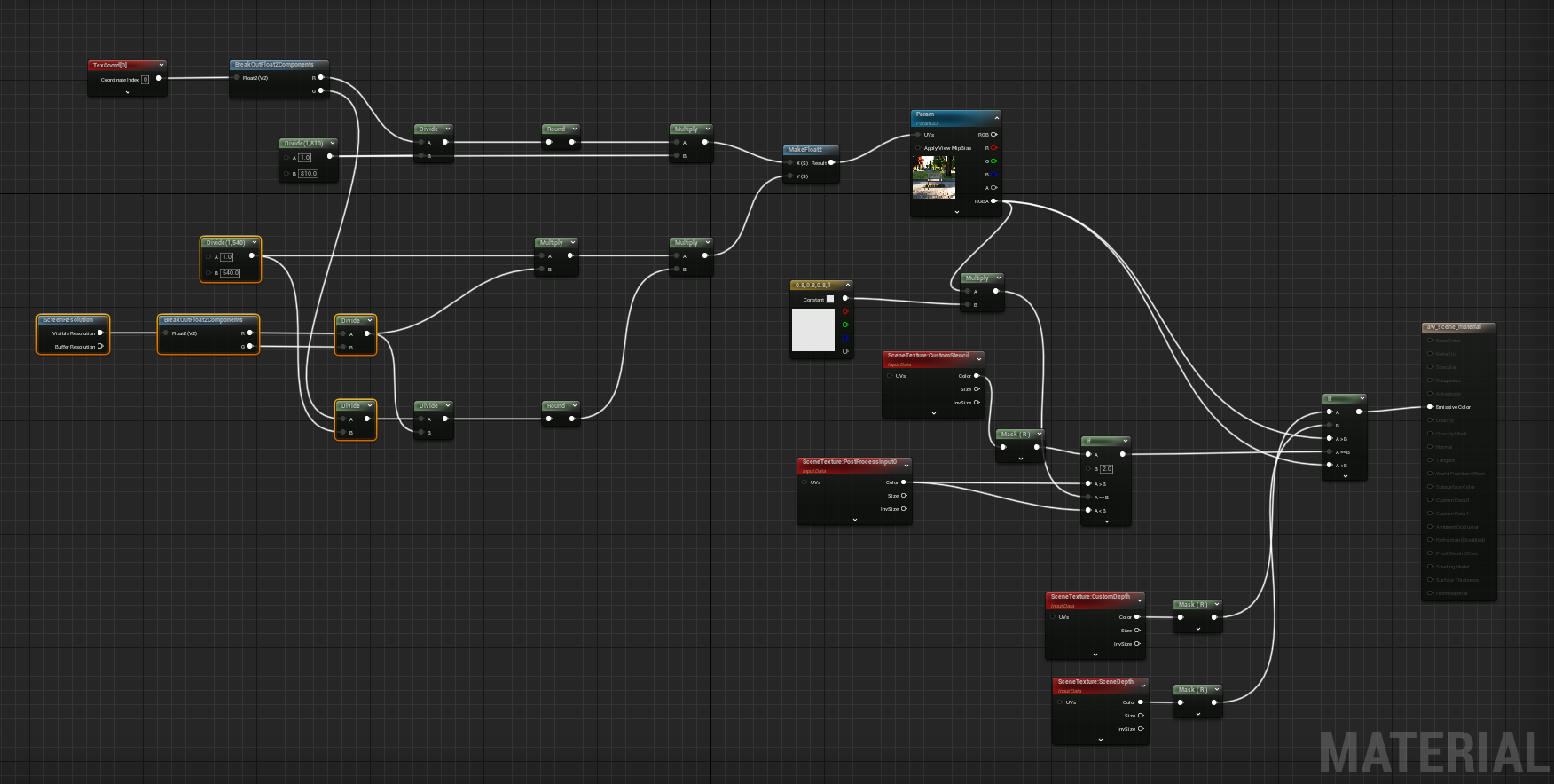

After some initial experimentation, I developed the following implementation for Ash Wednesday using Unreal Engine 5.2. Very simple maps were built to fit the game's narrative, using rudimentary primitives to mock up objects such as chairs and walls. These 3D elements were given a basic depth material that mapped PixelDepth directly to Emissive Color.
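The write-up does not say how raw depth (in Unreal units) was brought into a displayable 0 to 1 range, or whether it was inverted. As an assumption-labeled sketch, here is one way to normalize a grid of linear depths into 8-bit gray. ControlNet's depth model was trained on MiDaS-style maps in which nearer surfaces are brighter, so `invert=True` flips the far-is-brighter result of a direct depth-to-color mapping. The function name and the min/max defaults are hypothetical choices, not Ash Wednesday's material.

```python
def depth_to_gray(depth, near=None, far=None, invert=True):
    """Map a 2D grid of linear depths to 8-bit gray values (0-255).

    near/far default to the min/max of the data. With invert=True, nearer
    surfaces come out brighter, matching MiDaS-style depth maps.
    """
    values = [v for row in depth for v in row]
    near = min(values) if near is None else near
    far = max(values) if far is None else far
    span = (far - near) or 1.0  # avoid dividing by zero on a flat map
    gray = []
    for row in depth:
        out_row = []
        for v in row:
            t = min(max((v - near) / span, 0.0), 1.0)  # clamp to [0, 1]
            if invert:
                t = 1.0 - t
            out_row.append(round(t * 255))
        gray.append(out_row)
    return gray
```

Fixing `near` and `far` per level, rather than per image, would keep brightness consistent between rooms; that is a design choice, not something the original pipeline specifies.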

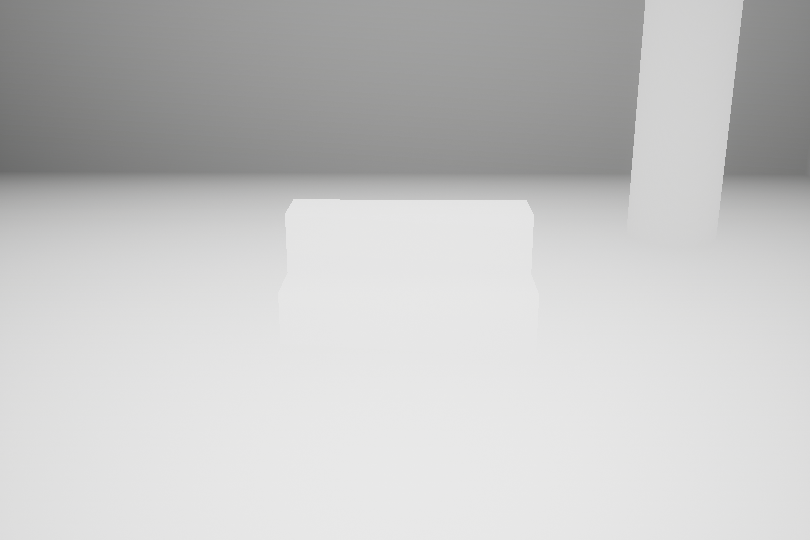

A special room camera blueprint was created to set up each room's shot. Once the level design was complete, each room camera was invoked to save a screenshot of its view, rendered with the depth material, at 810x540 (the working game resolution, chosen for stylistic reasons). These rendered depth images looked like this:

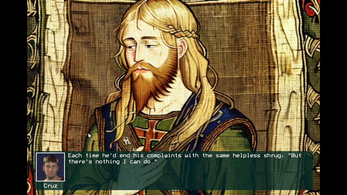
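Outside the engine, saving a depth map only requires writing the gray values to an image file. A minimal stdlib-only sketch, assuming the 8-bit rows from a normalization step like the one described above; it encodes them as binary PGM (P5), chosen here only because it needs no third-party library. The function name and the size check are hypothetical; convert to PNG if your Stable Diffusion front end expects it.

```python
def encode_pgm(gray, expected_size=(810, 540)):
    """Encode 8-bit grayscale rows as the bytes of a binary PGM (P5) file.

    expected_size is (width, height); pass None to skip the check.
    """
    height, width = len(gray), len(gray[0])
    if expected_size is not None and (width, height) != tuple(expected_size):
        raise ValueError(f"expected {expected_size}, got {(width, height)}")
    header = f"P5\n{width} {height}\n255\n".encode("ascii")
    return header + b"".join(bytes(row) for row in gray)
```

Usage would look like `open("room_01_depth.pgm", "wb").write(encode_pgm(gray))`, where the filename is purely illustrative.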

To create the room image from the depth map, I used Stable Diffusion with the NextPhoto 2.0 checkpoint model, a prompt appropriate to the room, CFG 20, the DDIM sampler, and 80 steps at 768x512 resolution, along with a ControlNet depth map in balanced mode with varying prompt/model ratios. The resulting image was then fed into img2img and upscaled to 1536x1024 using SwinIR_4x with a denoising strength of 0.2. Finally, the 1536x1024 image was downscaled to 810x540. For example, the depth map above was turned into this room image:

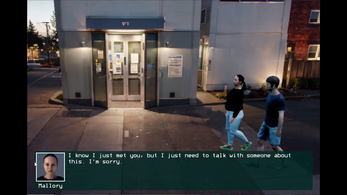
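The resolutions above form a small chain that is easy to check. Stable Diffusion 1.x generates at sizes divisible by 8 (its VAE downsamples by a factor of 8), and 810 is not, which is plausibly why generation happens at 768x512 before scaling back to 810x540; the write-up does not state this reason, so treat it as my inference. Both sizes are 3:2, so no cropping is needed. The 2x upscale factor is inferred from 768x512 becoming 1536x1024, even though the upscaler model is named SwinIR_4x. The function name is hypothetical.

```python
from fractions import Fraction


def plan_resolutions(game=(810, 540), sd=(768, 512), upscale=2):
    """Check the chain: SD generation size -> upscaled size -> game size."""
    # Stable Diffusion's latents are 1/8 the image size, so the
    # generation width and height should both be multiples of 8.
    if sd[0] % 8 or sd[1] % 8:
        raise ValueError("Stable Diffusion size must be a multiple of 8")
    upscaled = (sd[0] * upscale, sd[1] * upscale)
    return {
        "same_aspect": Fraction(*game) == Fraction(*sd),
        "aspect": Fraction(*game),
        "upscaled": upscaled,
        "downscale": Fraction(game[0], upscaled[0]),
        "game_is_multiple_of_8": game[0] % 8 == 0 and game[1] % 8 == 0,
    }
```

With the defaults, the aspect ratio is 3/2 at both ends, the upscaled size is (1536, 1024), and the final downscale factor is 135/256 (about 0.53).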

When the game runs, these images are fed into the room camera's Scene Capture Component 2D through a custom post process material that (1) pixelates the scene into an exact 810x540 image, for nonessential stylistic reasons; (2) renders the pixels of any object that has custom depth enabled and is closer than the other objects; and (3) overwrites every remaining pixel with the corresponding pixel of the 810x540 room image texture:

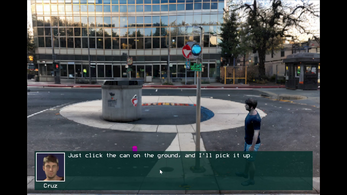
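The per-pixel logic of steps (1) to (3) can be restated on the CPU. This is a minimal sketch, not the actual node-based material. It assumes plain 2D lists for scene color, scene depth (all objects, including the block-out geometry), and custom depth, with `math.inf` wherever no custom-depth object was drawn, which is roughly how Unreal's CustomDepth buffer behaves. The names and the `eps` tolerance are hypothetical.

```python
import math


def pixelate(img, out_w, out_h):
    """Nearest-neighbour resample of a 2D pixel grid to exactly out_w x out_h."""
    in_h, in_w = len(img), len(img[0])
    return [[img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def composite(scene_color, scene_depth, custom_depth, room_image, eps=1e-3):
    """Keep rendered pixels of frontmost custom-depth objects; else use the room image.

    custom_depth holds math.inf wherever no custom-depth object was drawn.
    scene_depth contains every object, including the block-out geometry.
    """
    out = []
    for r, room_row in enumerate(room_image):
        row = []
        for c, room_px in enumerate(room_row):
            cd, sd = custom_depth[r][c], scene_depth[r][c]
            # Visible only if a custom-depth object is here and nothing is in front of it.
            if cd != math.inf and cd <= sd + eps:
                row.append(scene_color[r][c])
            else:
                row.append(room_px)
        out.append(row)
    return out
```

If a block-out cube is nearer than the player at some pixel, scene depth there is smaller than custom depth, so the room image wins; that is the comparison that lets the player disappear behind the bench.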

This allows the player model (which has a custom depth stencil enabled so that it is not overwritten by the room image) to appear in the scene:

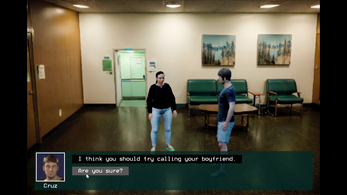

Thanks to the post process material's depth testing, the player can be hidden behind the level cubes (note the avatar at center left, behind the bench).

I was also able to implement the concept by giving all level background objects a simple material that mapped ScreenPosition ViewportUV to the room image UV, but I prefer the technique above because it requires no changes to existing 3D objects, materials, or levels.
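The ScreenPosition alternative means each background surface ignores its own UVs and samples the room image at the pixel's own screen position. Because the room image was generated from that exact camera view, the lookup reproduces the image pixel for pixel when the resolutions match. A minimal sketch of the lookup, assuming nearest-neighbour sampling and a top-left UV origin; the function names are hypothetical.

```python
def viewport_uv(x, y, width, height):
    """Normalised [0, 1] screen coordinates of a pixel centre (origin top-left)."""
    return (x + 0.5) / width, (y + 0.5) / height


def sample_nearest(texture, u, v):
    """Point-sample a 2D texel grid at UV coordinates (u, v) in [0, 1]."""
    tex_h, tex_w = len(texture), len(texture[0])
    tx = min(int(u * tex_w), tex_w - 1)
    ty = min(int(v * tex_h), tex_h - 1)
    return texture[ty][tx]


def shade_background(room_image, width, height):
    """Colour every background pixel by looking up the room image at its screen UV."""
    return [[sample_nearest(room_image, *viewport_uv(x, y, width, height))
             for x in range(width)]
            for y in range(height)]
```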

Future Improvements

While my implementation is specific to Unreal Engine, the concept should be reproducible in many 3D engines, including very lightweight ones such as web- and mobile-oriented engines.

The depth material could be skipped entirely by saving an EXR screenshot with depth information from each room camera. This would be preferable for the same reason that the post process material is a better option than the ScreenPosition material mentioned above: it does not require changing existing levels, materials, or objects.

One of the biggest challenges with the existing implementation is getting Stable Diffusion to register scale correctly within the depth map. Known "landmarks", such as a mannequin placed within the map, help greatly. Such a landmark could sit at the side of the image and be cropped out afterward. Other techniques could surely be developed here, such as adding a second ControlNet driven by normals rendered from the same camera.
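A landmark helps because its on-screen size follows directly from the pinhole camera model: projected height is roughly the focal length in pixels times real height divided by depth. A minimal sketch, assuming an 810-pixel-wide view and a 90 degree horizontal field of view (Unreal's default camera FOV; the actual room cameras may differ). The function names are hypothetical, and the formula treats the landmark as a flat upright target at a single depth.

```python
import math


def focal_length_px(width_px, hfov_deg):
    """Pinhole focal length in pixels from image width and horizontal field of view."""
    return (width_px / 2) / math.tan(math.radians(hfov_deg) / 2)


def projected_height_px(object_height, depth, width_px=810, hfov_deg=90.0):
    """Approximate on-screen height of an upright object at a given planar depth.

    object_height and depth must use the same unit (e.g. centimetres in Unreal).
    """
    return focal_length_px(width_px, hfov_deg) * object_height / depth
```

Under these assumptions, a 180 cm mannequin 400 cm from the camera would appear about 182 pixels tall, which gives a concrete scale cue to compare against the generated room image.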

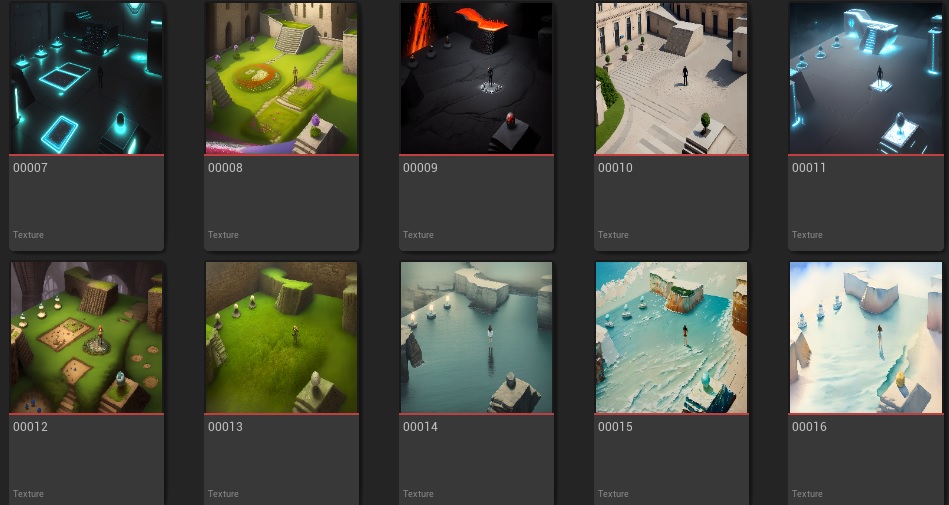

While the point-and-click implementation in Ash Wednesday is quite rough and sometimes poorly matched to the room image, I believe its AI-image-driven design concept holds considerable potential for the rapid development of static scenes. For example, by applying this technique to the depth map of a simple top-down template map, I was able to generate a large number of level variations literally within minutes.

I hope other game designers/developers will try this approach and further explore the use of AI imagery to quickly prototype, enhance, and implement 3D-interactable scenes.
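For the top-down variant, the depth input can be even simpler than a perspective render: an orthographic camera looking straight down sees each cell's depth as camera height minus surface height. A minimal sketch, assuming a hypothetical character-grid template (for example `"#"` for a 300 cm wall, `"T"` for a 100 cm table, `"."` for floor) rather than the 3D template map actually used. Variations would then come from rerunning generation on the same depth map with different prompts or seeds; this code only produces the depth input.

```python
def topdown_depth(template, heights, camera_height, cell_px=1):
    """Orthographic top-down depth map from a character grid.

    Each character is one map cell; its depth is the distance from a camera
    looking straight down at camera_height to the top of that cell's geometry.
    """
    depth = []
    for line in template:
        row = []
        for ch in line:
            row.extend([camera_height - heights[ch]] * cell_px)
        depth.extend([list(row) for _ in range(cell_px)])
    return depth
```

For example, `topdown_depth(["#####", "#..T#", "#####"], {"#": 300.0, "T": 100.0, ".": 0.0}, 1000.0, cell_px=8)` yields a 40x24 depth grid in which the walls are the nearest surfaces.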

| Status | Released |
|---|---|
| Platforms | Windows |
| Author | DomOfTheWoods |
| Genre | Adventure |
| Tags | 3D, Narrative, pigsquad, Point & Click, slowjam, Story Rich, Unreal Engine, Voice Acting |


Install instructions

Extract the contents of the Zip file. Within the "Ash Wednesday" directory, run "AshWednesday.exe".

If needed, optional redistributables can be found in Engine\Extras\Redist\en-us.

Two original OST MP3s are included in the game's directory.

Comments

I just want to say I love how you made a point-and-click game in Unreal 5; it has inspired me to move away from Unity.

Thank you! Good luck!

Hey! Loved the technical write-up on your approach. Definitely unique in feeling. Can't wait to see what else you can do with it.

Thanks, Mike! :) It’s interesting seeing how AI is accelerating and expanding gamedev!